Does Parsing CSV Files Hit the CPU Hard?

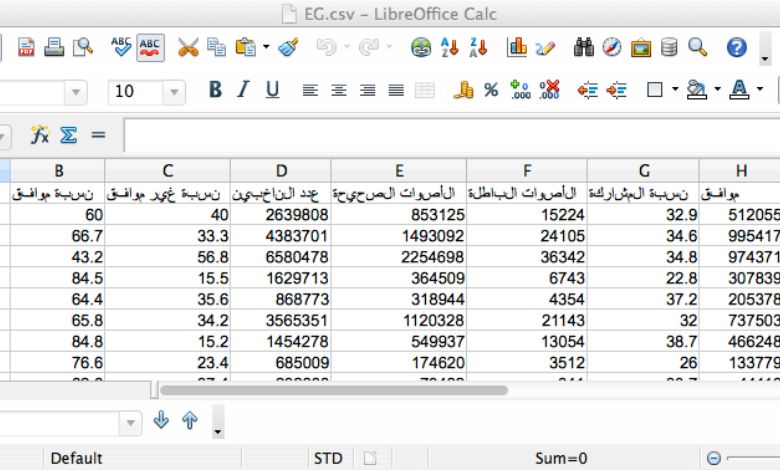

When I was working with large CSV files, I noticed parsing them could really hit my CPU hard, especially if the file had millions of rows. I had to optimize my code and use chunking to avoid my system slowing down or freezing.

Yes, parsing CSV files can hit the CPU hard, especially with millions of rows, because every line has to be read, split into fields, and converted. Techniques like chunking or optimized libraries can help reduce the load and improve performance.

Read on as we dig deeper into the question “Does parsing CSV files hit the CPU hard?” We’ll explore practical solutions and tips to optimize performance while working with large CSV files.

Why Does Parsing CSV Files Hit the CPU Hard?

CSV (Comma-Separated Values) files are widely used for storing and transferring data because they are simple and lightweight. However, when you parse or process a CSV file, it can put significant strain on your CPU. This may not be a big issue for small files, but for large-scale projects or when handling large volumes of data, the CPU can struggle to keep up. Let’s explore why parsing CSV files can be resource-intensive and what you can do to optimize performance.

Parsing CSV files can hit the CPU hard for several reasons:

Large File Sizes

When CSV files are huge (think gigabytes or even terabytes), processing them line by line or loading them into memory can overwhelm your CPU. Every line has to be read, broken into individual columns, and processed, which takes time and power.

Lack of Structure

CSV files are plain text files with no strict data structure. Unlike databases or structured formats like JSON or XML, CSV files don’t include metadata or validation rules. This makes parsing more complex because:

- You need to manually interpret the data format.

- Inconsistent or corrupted data (e.g., missing commas, extra spaces) can slow down processing.

Parsing Logic

The logic used to parse CSV files often involves:

- Splitting each line into fields based on delimiters (commas or other symbols).

- Handling edge cases like quoted fields, escape characters, or commas and line breaks inside quoted values.

- Converting string data into usable formats (e.g., numbers, dates).

All these operations require your CPU to perform many small tasks repeatedly, which can add up.
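
To make these steps concrete, here is a minimal sketch using Python's built-in csv module. The sample data and the `parse_row` helper are made up for illustration. It shows why a plain split on commas is not enough and where the type conversion work comes from.

```python
import csv
import io
from datetime import date

# Hypothetical sample data: the name in the first record contains a comma.
SAMPLE = 'id,name,joined,score\n1,"Smith, Jane",2023-04-01,91.5\n2,Bob,2022-11-15,78\n'


def parse_row(row):
    """Convert one row of strings into typed Python values."""
    return {
        "id": int(row["id"]),
        "name": row["name"],
        "joined": date.fromisoformat(row["joined"]),
        "score": float(row["score"]),
    }


# A naive split breaks the quoted field into two pieces.
first_data_line = SAMPLE.splitlines()[1]
naive_fields = first_data_line.split(",")

# csv.DictReader understands the quotes and keeps the field intact.
records = [parse_row(row) for row in csv.DictReader(io.StringIO(SAMPLE))]

print(len(naive_fields))   # 5 pieces instead of 4 fields
print(records[0]["name"])  # Smith, Jane
```

Every row goes through the same splitting, quote handling, and conversion, which is why the cost grows with the number of rows and columns.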

Multi-threading Challenges

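Most CSV parsers process files sequentially, one line at a time. Splitting the work is not trivial, because a quoted field can contain line breaks, so a parser cannot always tell where a record starts from an arbitrary point in the file. This sequential approach can be slow when dealing with very large files because it doesn’t take full advantage of modern multi-core CPUs.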

How to Optimize Performance When Parsing CSV Files

Optimizing CSV parsing can significantly reduce CPU usage and improve performance. Here are some proven strategies:

Use Optimized Libraries

Instead of writing your own CSV parser, use well-optimized libraries that are designed for performance. Popular libraries like pandas (Python), OpenCSV (Java), or csv-parser (Node.js) handle CSV files efficiently and can manage large datasets better than custom solutions.

Read Files in Chunks

Loading an entire file into memory at once can quickly overwhelm your CPU and RAM. Instead, read and process the file in smaller chunks. For example:

- In Python, you can use pandas.read_csv() with the chunksize parameter, which returns an iterator so you can process a few thousand rows at a time.

- This method keeps memory usage bounded, because only one chunk is held in memory at any moment.
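
The same idea can be sketched with only the standard library. The example below is an illustration, not a tuned implementation: the `iter_chunks` and `total_amount` helpers and the `amount` column are hypothetical names, and the demo file is generated on the fly so the snippet runs on its own.

```python
import csv
import os
import tempfile
from itertools import islice


def iter_chunks(path, chunk_size):
    """Yield lists of at most chunk_size rows (as dicts) from a CSV file."""
    with open(path, newline="", encoding="utf-8") as f:
        reader = csv.DictReader(f)
        while True:
            chunk = list(islice(reader, chunk_size))
            if not chunk:
                break
            yield chunk


def total_amount(path, chunk_size=1000):
    """Sum the (hypothetical) 'amount' column one chunk at a time."""
    total = 0.0
    for chunk in iter_chunks(path, chunk_size):
        total += sum(float(row["amount"]) for row in chunk)
    return total


# Build a small demo file with 10 data rows.
with tempfile.NamedTemporaryFile(
    "w", suffix=".csv", delete=False, newline="", encoding="utf-8"
) as tmp:
    writer = csv.writer(tmp)
    writer.writerow(["id", "amount"])
    writer.writerows((i, i * 0.5) for i in range(10))
    demo_path = tmp.name

chunk_sizes = [len(chunk) for chunk in iter_chunks(demo_path, 4)]
demo_total = total_amount(demo_path, chunk_size=4)
os.remove(demo_path)

print(chunk_sizes)  # [4, 4, 2]
print(demo_total)   # 22.5
```

With pandas, iterating over `pandas.read_csv(path, chunksize=...)` plays the same role, with each chunk arriving as a DataFrame instead of a list of dicts.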

Use Parallel Processing

Take advantage of modern multi-core processors by using parallel processing:

- Divide the CSV file into smaller parts and process them simultaneously across multiple cores.

- Libraries like Dask or tools like Apache Spark are excellent for handling distributed workloads.
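
As a rough sketch of the idea, the example below assumes the large file has already been split into several part files, each with its own header row. The function names are hypothetical, and counting rows stands in for whatever per-row work you actually need.

```python
import csv
from concurrent.futures import ProcessPoolExecutor


def count_rows(path):
    """Worker: parse one part file and return its number of data rows."""
    with open(path, newline="", encoding="utf-8") as f:
        reader = csv.reader(f)
        next(reader, None)  # skip the header row
        return sum(1 for _ in reader)


def count_rows_parallel(paths, max_workers=None):
    """Parse several part files at once, one task per file across worker processes."""
    with ProcessPoolExecutor(max_workers=max_workers) as pool:
        return sum(pool.map(count_rows, paths))


def count_rows_sequential(paths):
    """Same result on a single core, useful as a baseline for comparison."""
    return sum(map(count_rows, paths))
```

On platforms that start worker processes by spawning a fresh interpreter, call `count_rows_parallel` from inside an `if __name__ == "__main__":` block. Processes are used rather than threads because parsing is CPU-bound work. Libraries such as Dask automate this kind of partitioning, so hand-rolled splitting is mostly useful for simple cases.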

Preprocess Data

Clean up the CSV file before parsing. For example:

- Remove empty rows or columns.

- Fix inconsistent formatting or missing delimiters.

- Convert unnecessary string data into simpler formats.

This reduces the amount of work the parser has to do.

Adjust Delimiter and Encoding Settings

Parsing can slow down if the parser has to guess the delimiter or encoding. Explicitly specify the delimiter (e.g., commas, tabs) and file encoding (e.g., UTF-8) to avoid unnecessary overhead.
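
A minimal sketch of what being explicit looks like with Python's built-in csv module follows. The `read_rows` helper and the demo file contents are invented for the example.

```python
import csv
import os
import tempfile


def read_rows(path, delimiter=",", encoding="utf-8"):
    """Read all rows with an explicit delimiter and encoding (no guessing)."""
    # newline="" lets the csv module handle line endings inside quoted fields.
    with open(path, newline="", encoding=encoding) as f:
        return list(csv.reader(f, delimiter=delimiter))


# Demo: a small tab-separated file containing a non-ASCII character.
with tempfile.NamedTemporaryFile(
    "w", suffix=".tsv", delete=False, newline="", encoding="utf-8"
) as tmp:
    tmp.write("name\tprice\ncafé au lait\t3.50\n")
    tsv_path = tmp.name

rows = read_rows(tsv_path, delimiter="\t", encoding="utf-8")
os.remove(tsv_path)

print(rows)  # [['name', 'price'], ['café au lait', '3.50']]
```

In pandas, the equivalent settings are the `sep` and `encoding` arguments of `read_csv`.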

Does Parsing CSV Files Hit the CPU Hard in Large-Scale Projects?

In large-scale projects, parsing CSV files can become a major bottleneck. This is especially true when:

- You’re processing data in real-time or under strict deadlines.

- Your files contain millions of rows or complex data structures.

- You’re working with cloud-based systems where CPU usage translates to higher costs.

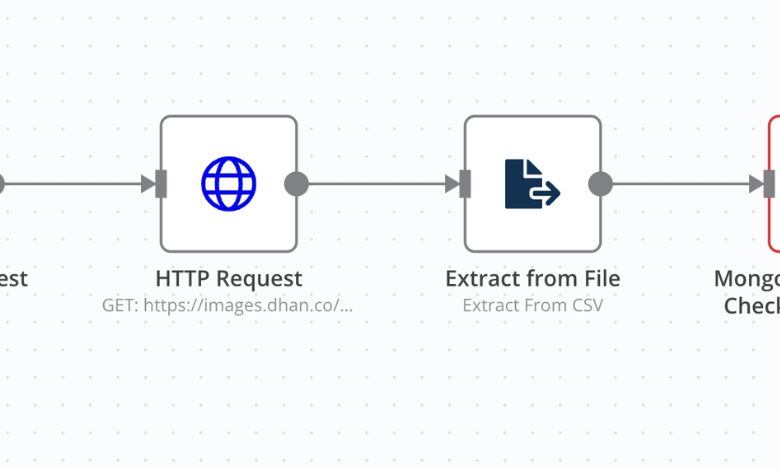

For instance, in data engineering pipelines, CSV files are often used for ingesting data into databases or analytics systems. Without optimization, CPU-intensive parsing can slow down the entire workflow, leading to delays and higher operational costs.

How to Handle Large-Scale Parsing

- Use streaming parsers: These read files incrementally rather than loading them all at once. Tools like Fast-CSV (Node.js) or Python’s built-in csv module support this approach.

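- Compress files: Use compressed formats like GZIP to reduce the file size and speed up transfer times. Some libraries can parse compressed files directly. Keep in mind that decompression itself costs some CPU time, so this mainly helps when disk or network speed is the bottleneck.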

- Offload work to cloud services: Platforms like AWS Glue or Google Cloud Dataflow are designed for large-scale data processing and can handle massive CSV files efficiently.
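
The first two points can be combined. The sketch below streams rows straight out of a GZIP-compressed file using only the Python standard library. The `stream_rows` helper, the column names, and the demo data are assumptions made for the example.

```python
import csv
import gzip
import os
import tempfile


def stream_rows(path):
    """Yield rows one at a time from a gzip-compressed CSV file."""
    # Text mode ("rt") decompresses on the fly; nothing is unpacked to disk.
    with gzip.open(path, "rt", newline="", encoding="utf-8") as f:
        yield from csv.DictReader(f)


# Demo: write a small compressed file, then stream it back.
fd, gz_path = tempfile.mkstemp(suffix=".csv.gz")
os.close(fd)
with gzip.open(gz_path, "wt", newline="", encoding="utf-8") as f:
    writer = csv.writer(f)
    writer.writerow(["sensor", "reading"])
    writer.writerows([("a", 1.5), ("b", 2.5), ("a", 4.0)])

max_reading = max(float(row["reading"]) for row in stream_rows(gz_path))
os.remove(gz_path)

print(max_reading)  # 4.0
```

Because `stream_rows` is a generator, only one row is held in memory at a time, no matter how large the file is.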

Best Practices to Minimize CPU Usage While Parsing CSV Files

Here are some actionable best practices:

Avoid Unnecessary Parsing

- Only parse the parts of the file you actually need. For example, if you only need specific columns or rows, skip the rest.
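
Here is a small sketch of that idea with the built-in csv module. The `read_selected` helper and the sample columns are hypothetical. Note that the csv module still splits every field of the rows it reads, so the savings come from skipping conversion and storage of unused columns and from stopping early.

```python
import csv
import io
from itertools import islice

# Hypothetical wide file; only "id" and "total" matter for this task.
SAMPLE = (
    "id,name,email,notes,total\n"
    "1,Ann,ann@example.com,first order,10.0\n"
    "2,Ben,ben@example.com,,20.5\n"
    "3,Cid,cid@example.com,repeat customer,7.25\n"
)


def read_selected(lines, columns, max_rows=None):
    """Return only the named columns, stopping after max_rows data rows."""
    reader = csv.reader(lines)
    header = next(reader)
    indexes = [header.index(name) for name in columns]
    # islice stops pulling lines once max_rows is reached,
    # so the rest of the input is never read.
    return [tuple(row[i] for i in indexes) for row in islice(reader, max_rows)]


selected = read_selected(io.StringIO(SAMPLE), ["id", "total"], max_rows=2)
print(selected)  # [('1', '10.0'), ('2', '20.5')]
```

pandas offers the same controls through the `usecols` and `nrows` arguments of `read_csv`.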

Use Indexing

- If your file is repeatedly parsed, consider indexing it. Indexes let you jump to specific rows or columns without scanning the whole file.
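
One simple form of indexing is a list of byte offsets, one per row. The sketch below is an illustration under a stated assumption: no quoted field in the file contains a line break, so each physical line is one record. The helper names and demo data are hypothetical.

```python
import csv
import os
import tempfile


def build_offset_index(path):
    """Return the byte offset at which each data row starts (header excluded)."""
    offsets = []
    with open(path, "rb") as f:
        f.readline()  # skip the header line
        while True:
            pos = f.tell()
            if not f.readline():
                break
            offsets.append(pos)
    return offsets


def read_row_at(path, offset, encoding="utf-8"):
    """Seek straight to one row and parse only that line."""
    with open(path, "rb") as f:
        f.seek(offset)
        line = f.readline().decode(encoding)
    return next(csv.reader([line]))


# Demo: a file with 100 data rows.
with tempfile.NamedTemporaryFile(
    "w", suffix=".csv", delete=False, newline="", encoding="utf-8"
) as tmp:
    tmp.write("id,label\n" + "".join(f"{i},row-{i}\n" for i in range(100)))
    index_demo_path = tmp.name

index = build_offset_index(index_demo_path)
row_42 = read_row_at(index_demo_path, index[42])
os.remove(index_demo_path)

print(row_42)  # ['42', 'row-42']
```

Building the index requires one full scan, so it only pays off when the same file is read many times. The index must also be rebuilt whenever the file changes.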

Optimize Data Types

- Convert string-based data (e.g., dates or numbers) into more efficient types before processing. For example, use int or float instead of string representations of numbers.

Profile Your Code

- Use profiling tools to identify bottlenecks in your parsing logic. Tools like cProfile (Python) or VisualVM (Java) can pinpoint where your code is consuming the most CPU time.
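
As a quick illustration, the sketch below profiles a toy parsing function with cProfile. The `parse_and_sum` function and the synthetic data exist only to give the profiler something to measure.

```python
import cProfile
import csv
import io
import pstats


def parse_and_sum(text):
    """Toy workload: parse CSV text and sum the second column."""
    reader = csv.reader(io.StringIO(text))
    next(reader)  # skip the header row
    return sum(float(row[1]) for row in reader)


# Synthetic data, generated only for this demonstration.
data = "id,value\n" + "".join(f"{i},{i * 0.5}\n" for i in range(20_000))

profiler = cProfile.Profile()
profiler.enable()
result = parse_and_sum(data)
profiler.disable()

# Capture the report as text, sorted by cumulative time, top 5 entries.
report = io.StringIO()
pstats.Stats(profiler, stream=report).sort_stats("cumulative").print_stats(5)
print(report.getvalue())
```

The report lists how many times each function was called and how much time it took, which helps show whether time goes to the CSV reader itself or to the conversion code around it.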

Offload Heavy Parsing to GPUs

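- If your project involves parsing extremely large files, consider GPU-accelerated solutions like RAPIDS, whose cuDF library includes a GPU-based CSV reader. GPUs can handle highly parallel, repetitive tasks like parsing much faster than CPUs, provided the files are large enough to offset the cost of moving data to the GPU.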

Does Parsing CSV Files Hit the CPU Hard? Common Myths Debunked

Myth 1: Parsing CSV Files is Always CPU-Intensive

Fact: Parsing small or medium-sized CSV files typically doesn’t hit the CPU hard. The issue arises with poorly optimized code or very large files.

Myth 2: CSV is the Problem

Fact: CSV itself is not inherently slow. The performance issues usually come from inefficient parsing methods or tools.

Myth 3: More RAM Fixes Everything

Fact: While more RAM can help with memory-intensive operations, CPU usage depends more on the efficiency of your parsing logic.

FAQs:

Are CSV files easy to parse?

Yes, CSV files are easy to parse because they are simple text files with data separated by commas. However, issues like inconsistent formatting or large file sizes can make parsing harder.

Are CSV files compressed?

No, CSV files are not compressed by default—they are plain text. However, you can compress them using formats like ZIP or GZIP to save space.

Is pandas read_csv fast?

Yes, pandas.read_csv is fast for small to medium files, but it can slow down for very large datasets. For huge files, using chunks or parallel processing can speed it up.

What does CSV parsing mean?

CSV parsing means reading a CSV file and breaking its data into usable parts like rows and columns. This process converts the text into structured data for analysis or storage.

Conclusion

So, does parsing CSV files hit the CPU hard? It depends on the file size, parsing method, and the tools you use. While small files are usually easy to handle, large datasets can strain your CPU, especially if the parsing process isn’t optimized. By using efficient libraries, reading files in chunks, or leveraging parallel processing, you can significantly reduce the load on your CPU. Proper optimization ensures smoother performance, even with massive CSV files, making data processing more manageable.