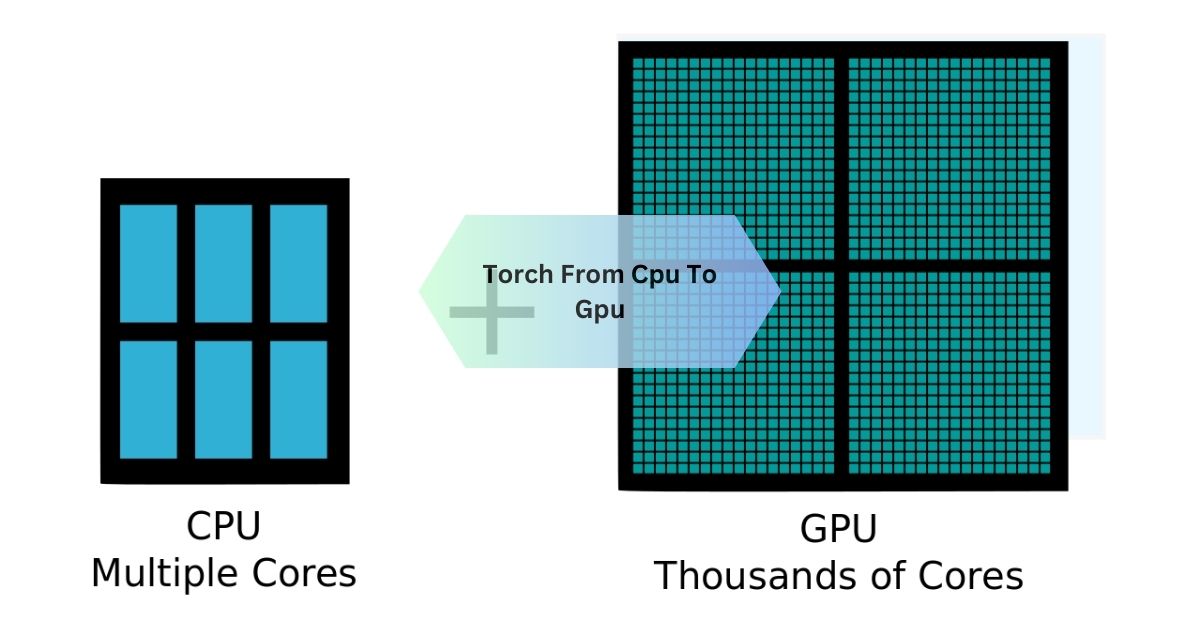

Torch From CPU To GPU – Simple Steps To Faster AI!

When I first moved my PyTorch model from the CPU to the GPU, I was amazed at how much faster it trained—it felt like cutting hours of waiting into minutes. At first, I forgot to call .cuda() on my tensors, but once I figured that out, everything clicked!

To switch PyTorch from CPU to GPU, use .to(device) or .cuda() to move your model and tensors to the GPU. This can speed up training significantly, especially for large models and datasets.

Read on as we dive into the details of moving PyTorch from CPU to GPU and explore how GPU acceleration makes your models run faster and more efficiently!

How do I transfer a PyTorch model from CPU to GPU?

To move your PyTorch model from CPU to GPU, call the .to(device) method or .cuda(). First, check whether a GPU is available using torch.cuda.is_available(). Then define a device variable like this: device = torch.device("cuda").

Load your model and call model.to(device) to transfer it to the GPU. Ensure your input tensors are also moved to the GPU using .to(device) or .cuda(), and keep the returned tensor (for example, x = x.to(device)), because tensors are not moved in place. This enables your model to use the GPU for faster computation.
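
As a minimal sketch of the steps above, the whole transfer might look like the following. The small nn.Linear layer is only a stand-in for your own model, and the layer sizes and the name input_tensor are illustrative assumptions. The device line falls back to the CPU so the snippet also runs on machines without a GPU:

```python
import torch
import torch.nn as nn

# Pick the GPU when one is available, otherwise stay on the CPU.
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

# A small stand-in model (assumption); replace it with your own network.
model = nn.Linear(4, 2)
model.to(device)  # modules are moved in place

# Tensors are not moved in place, so keep the returned tensor.
input_tensor = torch.randn(8, 4).to(device)

output = model(input_tensor)
print(output.shape, output.device)
```

The output tensor lives on the same device as the model and its inputs.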

What is the purpose of .cuda() in PyTorch?

.cuda() is a PyTorch method used to transfer models or tensors to the GPU. It ensures the computations are done using the GPU, which is much faster than the CPU for heavy tasks. You can use .cuda() directly on models or tensors, like model.cuda() or tensor.cuda().

It’s a shorthand for moving data to the current GPU, which is the first one by default. However, .to(device) is preferred for flexibility: the same line works whether device is "cpu", "cuda", or a specific GPU such as "cuda:0" on a multi-GPU machine. When the target is the same GPU, both achieve the same result of enabling GPU-based computation.
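
One detail applies to both .cuda() and .to(device): called on a model, they move its parameters in place, while called on a tensor they return a tensor on the target device and leave the original variable unchanged. A small sketch of the difference, where the names layer and x are illustrative and the CPU fallback is an assumption so the snippet runs anywhere:

```python
import torch
import torch.nn as nn

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

layer = nn.Linear(3, 1)
returned = layer.to(device)        # nn.Module.to() moves parameters in place...
same_object = returned is layer    # ...and returns the module itself

x = torch.ones(2, 3)
x.to(device)                 # result discarded: x itself stays on the CPU
x_on_device = x.to(device)   # keep the returned tensor instead

print(same_object, x.device, x_on_device.device)
```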

What Is The Role Of .to(device) In PyTorch For GPU Usage?

Switch Between CPU and GPU

When you create a model or tensor in PyTorch, it is placed on the CPU by default. If you want to use the GPU (which is much faster for large computations), you must explicitly move the model and data to the GPU.

For example:

```python
import torch

device = torch.device("cuda")  # Use GPU
model = model.to(device)  # Move model to GPU
input_tensor = input_tensor.to(device)  # Move input data to GPU
```

Ensure Compatibility

Both the model and tensors need to be on the same device (either CPU or GPU). If one is on the CPU and the other on the GPU, PyTorch will raise an error like:

RuntimeError: Expected all tensors to be on the same device.

By using .to(device), you ensure everything runs on the same device without conflicts.

Device Flexibility

The .to(device) method allows you to write flexible and reusable code. For instance, if a GPU is not available, you can fall back to the CPU easily:

```python
import torch

# Use the GPU when available, otherwise fall back to the CPU
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
model = model.to(device)
input_tensor = input_tensor.to(device)
```

This way, your code can run on any machine, with or without a GPU.

Handle Multi-GPU Systems

If you’re working on a system with multiple GPUs, .to(device) helps you specify which GPU to use. For example, you can move your data to cuda:0 (GPU 0) or cuda:1 (GPU 1) as needed:

```python
import torch

device = torch.device("cuda:0")  # Use the first GPU
model = model.to(device)
```

Work on Both Models and Tensors

The .to(device) method works not only for models but also for individual tensors or batches of data. For example:

```python
# Moving a tensor to GPU
tensor = tensor.to(device)
```

This ensures that the data is processed on the GPU for better performance during training or inference.

Supports Data Type Conversion

Besides moving data to a device, .to() can also change the data type (e.g., from float32 to float16), which reduces memory use at the cost of numerical precision.
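
A short sketch of data type conversion with .to(), under the assumption that float16 is the target type. The variable names are illustrative, and the device falls back to the CPU when no GPU is present:

```python
import torch

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

x = torch.randn(2, 3, dtype=torch.float32)
x_half = x.to(torch.float16)                 # change only the data type
x_both = x.to(device, dtype=torch.float16)   # change device and data type together

print(x.dtype, x_half.dtype, x_both.dtype, x_both.device)
```

Passing both arguments in one call avoids creating an intermediate copy of the tensor.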

How can I check if a GPU is available in PyTorch?

To check if a GPU is available, call torch.cuda.is_available(). It returns True if your PyTorch build supports CUDA and a compatible GPU is detected and ready for use. You can also read the name of a GPU by calling torch.cuda.get_device_name().

If you’re working with multiple GPUs, use torch.cuda.device_count() to see how many are accessible. Make sure your GPU drivers are installed and your PyTorch build supports CUDA. If no GPU is detected, nothing moves automatically: models and tensors simply stay on the CPU, where PyTorch creates them by default.
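
The three calls mentioned above can be combined into a small check. This is a sketch; list_gpus is a hypothetical helper name, not part of PyTorch:

```python
import torch

def list_gpus():
    """Return the names of all CUDA devices PyTorch can see (empty if none)."""
    if not torch.cuda.is_available():
        return []
    return [torch.cuda.get_device_name(i) for i in range(torch.cuda.device_count())]

gpu_names = list_gpus()
if gpu_names:
    for index, name in enumerate(gpu_names):
        print(f"cuda:{index} -> {name}")
else:
    print("No GPU detected; using the CPU.")
```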

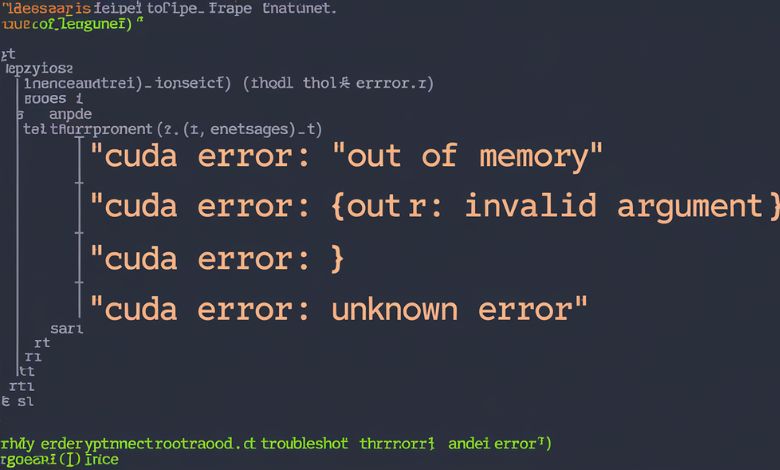

What Are Common Errors When Switching From CPU To GPU In PyTorch?

Forgetting to Move Tensors to the GPU

When you move your model to the GPU using .to(device) or .cuda(), the input tensors also need to be moved to the same GPU. If they are still on the CPU, you’ll get an error like:

RuntimeError: Expected all tensors to be on the same device.
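
One way to avoid this mistake is to route every batch through a single helper before it reaches the model. The sketch below assumes batches are tensors or nested lists, tuples, and dicts of tensors; move_to_device is a hypothetical helper, not a PyTorch function, and the batch keys are made up for illustration:

```python
import torch
import torch.nn as nn

def move_to_device(batch, device):
    """Recursively move tensors inside lists, tuples, and dicts to `device`."""
    if torch.is_tensor(batch):
        return batch.to(device)
    if isinstance(batch, dict):
        return {key: move_to_device(value, device) for key, value in batch.items()}
    if isinstance(batch, (list, tuple)):
        return type(batch)(move_to_device(item, device) for item in batch)
    return batch  # leave non-tensor values (ints, strings, ...) untouched

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
model = nn.Linear(4, 2).to(device)

batch = {"features": torch.randn(8, 4), "labels": torch.zeros(8, dtype=torch.long)}
batch = move_to_device(batch, device)

outputs = model(batch["features"])
```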

Mismatch Between Model and Tensors

If your model is on one GPU (e.g., "cuda:0") but your tensors are on another GPU or the CPU, this will lead to a mismatch error.

Solution: Ensure both the model and tensors are on the same device. Use a device variable consistently like this:

```python
import torch

# Define the device once and reuse it for the model and every tensor
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
model = model.to(device)
input_tensor = input_tensor.to(device)
```

Missing GPU Support

If your PyTorch installation doesn’t support CUDA, or your GPU drivers and CUDA toolkit are not properly installed, you won’t be able to use the GPU. You’ll see errors like:

AssertionError: Torch not compiled with CUDA enabled.

Solution: Install the correct version of PyTorch with CUDA support. Use PyTorch’s installation guide to choose the right version for your setup. Make sure your GPU drivers are up to date.
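
Before reinstalling anything, it can help to look at what the current installation reports. A small diagnostic sketch follows; cuda_report is a hypothetical helper name, while the attributes it reads are standard PyTorch ones:

```python
import torch

def cuda_report():
    """Collect basic facts about the installed PyTorch build."""
    return {
        "torch_version": torch.__version__,
        # None when the installed build has no CUDA support
        "built_with_cuda": torch.version.cuda,
        "cuda_available": torch.cuda.is_available(),
    }

report = cuda_report()
for key, value in report.items():
    print(f"{key}: {value}")
```

If built_with_cuda is None, the installed build has no CUDA support and a different PyTorch package is needed. If it shows a version but cuda_available is False, the GPU driver is the more likely suspect.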

Running Out of GPU Memory

GPUs have limited memory, and large models or input data can cause RuntimeError: CUDA out of memory.

Solution:

- Reduce the batch size during training or inference.

- Use torch.cuda.empty_cache() to release cached memory that PyTorch no longer uses. It does not free memory held by tensors that are still referenced.

- Consider using mixed precision training with torch.cuda.amp to save memory.
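
The suggestions above can be sketched as a single training step. This is an illustration under assumptions, not a tuned recipe: the model, batch size, learning rate, and random data are made up, and mixed precision is only enabled when a GPU is present. It uses torch.cuda.amp.GradScaler as named above; recent PyTorch releases may print a deprecation warning that points to torch.amp instead.

```python
import torch
import torch.nn as nn

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
use_amp = device.type == "cuda"  # only enable mixed precision on a GPU

model = nn.Linear(16, 4).to(device)
optimizer = torch.optim.SGD(model.parameters(), lr=0.01)
loss_fn = nn.CrossEntropyLoss()
scaler = torch.cuda.amp.GradScaler(enabled=use_amp)

# A smaller batch size is the simplest way to lower peak memory use.
batch_size = 8
inputs = torch.randn(batch_size, 16, device=device)
targets = torch.randint(0, 4, (batch_size,), device=device)

optimizer.zero_grad()
with torch.autocast(device_type=device.type, enabled=use_amp):
    loss = loss_fn(model(inputs), targets)

scaler.scale(loss).backward()  # scale the loss to limit float16 underflow
scaler.step(optimizer)         # unscale gradients, then run optimizer.step()
scaler.update()

if use_amp:
    torch.cuda.empty_cache()   # release cached blocks that are no longer in use
```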

Forgetting to Use torch.no_grad() for Inference

If you’re running inference but forget to disable gradient calculation, it will use unnecessary GPU memory.

Solution: Wrap your inference code in torch.no_grad(). Example:

```python
import torch

# Disable gradient tracking while running inference
with torch.no_grad():
    outputs = model(input_tensor)
```
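
A self-contained version of the same idea, with a stand-in model and random input (both assumptions for illustration). It also calls model.eval(), which is commonly paired with torch.no_grad() so that layers such as dropout and batch normalization behave as they should at inference time:

```python
import torch
import torch.nn as nn

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
model = nn.Linear(4, 2).to(device)
input_tensor = torch.randn(8, 4, device=device)

model.eval()  # switch layers such as dropout and batch norm to inference behavior
with torch.no_grad():  # do not record operations for backpropagation
    outputs = model(input_tensor)

print(outputs.requires_grad)  # False
```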

Multi-GPU Usage Issues

If you have multiple GPUs but don’t specify which one to use, "cuda" refers to the current device, which is the first GPU (cuda:0) unless you change it. Several jobs can then end up on the same GPU and compete for its memory.

Solution: Specify the GPU explicitly by using torch.device("cuda:0") or the appropriate GPU index.
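
A sketch of explicit GPU selection with a safety check, so that asking for a GPU index that does not exist falls back to the CPU instead of failing. pick_device is a hypothetical helper name:

```python
import torch

def pick_device(gpu_index=0):
    """Return cuda:<gpu_index> if that GPU exists, otherwise fall back to the CPU."""
    if torch.cuda.is_available() and 0 <= gpu_index < torch.cuda.device_count():
        return torch.device(f"cuda:{gpu_index}")
    return torch.device("cpu")

device = pick_device(1)  # ask for the second GPU
print(device)
```

Whether a silent fallback or an explicit error is better depends on the job; for long training runs you may prefer to raise an exception instead.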

Device-Specific Operations

CPU-based libraries such as NumPy cannot read GPU memory, so using them directly with GPU tensors leads to errors.

Solution: Ensure you convert data back and forth correctly. Use .cpu() if you need to process tensors with CPU-based libraries like NumPy. Example:

```python
numpy_array = tensor.cpu().numpy()
```
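
If the tensor is part of the autograd graph (it requires gradients), calling .numpy() on it raises an error, so .detach() is needed first. A minimal sketch with illustrative names, falling back to the CPU when no GPU is present:

```python
import torch

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

weights = torch.ones(3, device=device, requires_grad=True)
result = weights * 2  # still attached to the autograd graph

# detach() drops gradient tracking, cpu() copies to host memory if needed,
# and numpy() then exposes the values as a NumPy array.
numpy_array = result.detach().cpu().numpy()
print(numpy_array)
```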

Can tensors automatically move from CPU to GPU in PyTorch?

No, tensors do not automatically move from CPU to GPU in PyTorch. You need to manually transfer them using .to(device) or .cuda(). For example, if your model is on the GPU, your input tensors must also be moved to the same GPU using tensor.to(device).

If the tensors are not on the GPU, you’ll encounter errors during computation. By explicitly moving tensors, you ensure they align with your model’s device for smooth processing.

How do I verify if my PyTorch model is running on the GPU?

To check if your model is running on the GPU, use next(model.parameters()).device. This will tell you whether the model’s parameters are on the CPU or GPU. If it shows cuda, the model is on the GPU.

Additionally, you can confirm by printing your device variable or ensuring all tensors are also on cuda. Verifying this is important to ensure your model is utilizing the GPU for faster computation.
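
A sketch of this check follows. The first print uses the expression from the text; model_devices is a hypothetical helper that also looks at buffers (such as batch norm statistics), and the small nn.Sequential model is only a stand-in:

```python
import torch
import torch.nn as nn

def model_devices(model):
    """Return the set of devices that hold the model's parameters and buffers."""
    tensors = list(model.parameters()) + list(model.buffers())
    return {t.device for t in tensors}

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
model = nn.Sequential(nn.Linear(4, 8), nn.BatchNorm1d(8), nn.Linear(8, 2)).to(device)

print(next(model.parameters()).device)  # device of the first parameter
print(model_devices(model))             # a single entry means everything is on one device
```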

FAQs:

How do I change PyTorch from CPU to GPU?

To change from CPU to GPU in PyTorch, use .to(device) or .cuda() to move your model and tensors to the GPU. First, define the device using device = torch.device("cuda") and then apply it to your model and data.

Does Torch automatically use GPU?

No, PyTorch does not automatically use the GPU. You must explicitly move your model and tensors to the GPU using .to(device) or .cuda() to enable GPU computation.

How do I check which device my PyTorch model is on?

You can check the device of your model by using next(model.parameters()).device. This tells you whether it’s on the CPU or GPU.

Can I use PyTorch without a GPU?

Yes, PyTorch works perfectly on a CPU. It will just be slower compared to running on a GPU for large models or datasets.

How do I move a tensor back from GPU to CPU in PyTorch?

Use .cpu() to move a tensor back to the CPU, like tensor = tensor.cpu(). This is useful for converting tensors to NumPy arrays.

What happens if I run a GPU model on a system without a GPU?

If no GPU is available, PyTorch will throw an error when you try to move the model to "cuda", unless you set up a fallback to the CPU using device = torch.device("cuda" if torch.cuda.is_available() else "cpu").

Conclusion

Moving PyTorch from CPU to GPU is a simple but powerful way to speed up deep learning tasks. By transferring models and tensors to the GPU with .to(device) or .cuda(), you can significantly enhance performance and handle larger datasets more efficiently.